Tuesday 17 June, 2014, 15:21 - Satellites

Posted by Administrator

There is growing evidence to suggest that Google is planning to enter space by launching satellites of its own. Two separate pieces of news point in this direction. Firstly, Google has announced plans to purchase Skybox. Skybox operates low-earth orbit satellites whose purpose is to take high resolution images, of exactly the kind that are used by Google Maps. Following the recent lifting of US government restrictions on the use of images with better than 50 cm resolution, the move by Google to own its own earth imaging satellites makes complete sense.

Secondly, there is talk of Google becoming involved in the delivery of broadband via satellite through a network called WorldVu. WorldVu, if it goes ahead as described, suffers from many of the same problems that O3B will, but has a lot more to deal with due to its use of Ku-Band as opposed to O3B's use of Ka-Band.

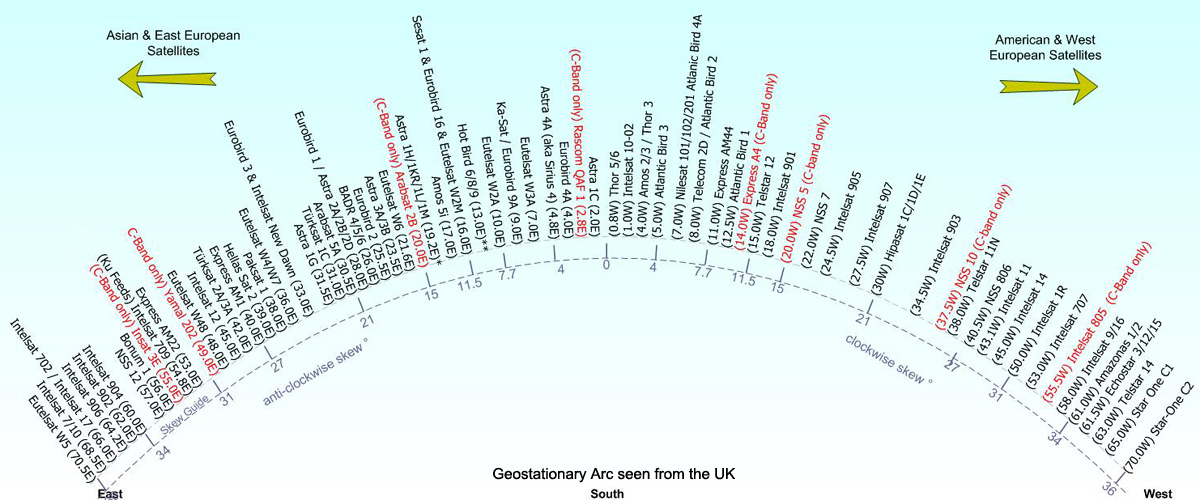

Why is the use of Ku-Band more complex? Ku-Band is already very heavily used for satellite broadcasting, as well as for a number of satellite broadband networks (such as Dish in the USA). As it is proposed that the WorldVu network will be non-geostationary (i.e. the satellites will move around in the sky as seen from the Earth), the downlinks will have to be switched off when the satellites are in a position that could cause interference to existing geostationary satellite services (which will generally be when the WorldVu satellites are over the equator, and whilst a few degrees either side of it). This is made worse because any uplink that could cause interference also needs to be switched off if it is pointing at the geostationary arc. The same is true of O3B, but the denser packing of Ku-Band satellites will make the situation far more complex.

For example, there are over 60 Ku-band satellites visible in the sky in the UK. As the arc as viewed from the UK is 70 degrees from end-to-end, this means there is approximately one satellite every degree.

Source: satellites.co.uk
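As a rough check on that spacing figure, the arithmetic can be sketched in a few lines of Python. The function name is made up for illustration, and the sum assumes the satellites are spread evenly along the visible arc, which in practice they are not.

```python
def mean_spacing_deg(arc_width_deg: float, satellite_count: int) -> float:
    """Average angular gap between satellites, assuming an even spread."""
    return arc_width_deg / satellite_count


# Figures quoted above: a 70 degree arc and (at least) 60 Ku-band satellites.
spacing = mean_spacing_deg(70, 60)
print(f"Average spacing: {spacing:.2f} degrees")
```

With more than 60 satellites in view the average gap only gets smaller, so 'about one per degree' is a fair summary.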

All of this switching on and off every time a satellite is over the equator (and, of course, when a satellite disappears beyond the horizon), and the need to connect to a different satellite at those times to maintain a connection, is complex and creates the environment for tremendous problems with 'dropped calls' if handover between satellites fails for any reason. Similarly, this switching will cause severe jitter (changes in timing), which in itself can cause problems for some internet applications (e.g. streaming).

Finally, and probably the weirdest issue with WorldVu, there are the antennas that are planned for user ground stations. It is suggested that Google plan to use antennas based on meta-materials. O3B have also, apparently, signed a deal to work on the development of meta-material based antennas. But at present such antennas have been proven only at Ka-Band (and then only in a developmental and not commercial form), and not at the Ku-Band proposed by Google. Even if they could be made to work at Ku-Band, there would be a loss in efficiency, making transmission from Earth to space next to impossible. On top of that, the antennas can only be steered around +/- 45 degrees, which means that some satellites, even when in view, will be out of reach.

There is a suggestion that Google's purchase of Skybox will provide a potential platform for an early launch of the WorldVu space segment. On the one hand it makes some sense. If you are launching one satellite, why not make it multi-purpose? Though it would increase the size and weight of the satellite, and the launch cost too, having the cameras directly connected to the Internet might make sense. Then again, eyes in the sky connected to the Internet is eerily similar to the world-changing paradigm that is posited in Arthur C. Clarke's book, The Light Of Other Days. Big Brother will definitely be able to watch you, as will your neighbour, your partner, the government, and anyone else with voyeuristic tendencies who wants to. Who's zoomin (in on) you?

Wednesday 11 June, 2014, 09:00 - Spectrum Management

Posted by Administrator

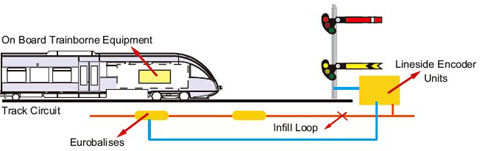

You would have thought that those designing systems that use the radio spectrum would check that the frequencies they planned to use would not cause interference to other systems and, equally importantly, that they would not suffer interference from other users. Such basic compatibility checks are critical to ensure that different communication systems can inter-operate successfully. So it is a bit of a surprise to find that the designers of the Eurobalise, a technology that forms part of the European Rail Traffic Management System and whose purpose is to assist in the control of train movements (and to help trains know where they are), have chosen a frequency which fails these simple safeguards.

Where they have gone wrong is to use a frequency for transferring information between the Eurobalise and the train that is in a European broadcast band!

The Eurobalise uplink operates on a centre frequency of 4234 kHz, with a frequency deviation of +/- 282 kHz. This means that a logic '1' is sent on a frequency of 4516 kHz and a logic '0' is sent on a frequency of 3952 kHz (source: Mermec Eurobalise specification). Interfering signals on, or near, frequencies of 3952 or 4516 kHz would cause the most trouble, but as the Balise's receiver is listening across the whole range 3952 to 4516 kHz, any transmission in this range would cause a problem. The (European) 75 metre broadcast band runs from 3950 to 4000 kHz. Any broadcasts in the 75 metre band could therefore cause a problem to nearby trains, but those on frequencies from approximately 3950 to 3955 kHz will have the greatest potential to interfere with the Balise's operation.

Do any such transmissions exist? According to short-wave.info, the BBC and Korean broadcaster KBS use a frequency of 3955 kHz on a daily basis, from the BBC's transmitter at Woofferton, Shropshire. If you click on the link (which will take you to Google Maps) you will notice that running alongside the village of Woofferton is a grey line - a railway!
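For anyone who wants to check the sums, here is a minimal Python sketch of the frequency arithmetic above. The function and variable names are made up for illustration, and the receiver is treated simply as listening across everything between the two tones, as described in the text, rather than being modelled from the actual specification.

```python
CENTRE_KHZ = 4234             # Eurobalise uplink centre frequency
DEVIATION_KHZ = 282           # frequency deviation either side of centre
BAND_75M_KHZ = (3950, 4000)   # European 75 metre broadcast band
BROADCAST_KHZ = 3955          # frequency in use at Woofferton


def fsk_tones(centre_khz, deviation_khz):
    """Return the (logic '0', logic '1') frequencies of the uplink."""
    return centre_khz - deviation_khz, centre_khz + deviation_khz


def overlap(range_a, range_b):
    """Return the overlapping part of two frequency ranges, or None."""
    low = max(range_a[0], range_b[0])
    high = min(range_a[1], range_b[1])
    return (low, high) if low <= high else None


logic0_khz, logic1_khz = fsk_tones(CENTRE_KHZ, DEVIATION_KHZ)
shared_khz = overlap((logic0_khz, logic1_khz), BAND_75M_KHZ)
offset_khz = BROADCAST_KHZ - logic0_khz

print(f"Logic '0' on {logic0_khz} kHz, logic '1' on {logic1_khz} kHz")
print(f"Overlap with the 75 metre band: {shared_khz} kHz")
print(f"Broadcast on {BROADCAST_KHZ} kHz is {offset_khz} kHz from the logic '0' tone")
```

Almost the whole of the broadcast band sits inside the range the receiver is listening to, and the 3955 kHz transmission lands just 3 kHz away from the logic '0' tone.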

But surely fears of interference are unfounded and just another example of scare tactics by spectrum managers bent on safeguarding their highly paid jobs. Sadly not... It appears that the transmissions from Woofferton have been disrupting trains between Leominster and Ludlow! According to the article in the Hereford Times, Network Rail, the organisation responsible for operating the rail infrastructure in the UK, claim:

while the interference does not pose a risk to the safe operation of the railway, it has been stopping trains en-route.

Oops!

The only other high power transmitter in this band in Europe is at Issoudun in central France. A quick check of Google maps shows that there are no train tracks in the immediate vicinity. There is a transmitter in Kall-Krekel in north west Germany which also uses frequencies in the 75 metre broadcasting band that could also cause interference to Eurobalises, but that transmitter uses much lower power than those at Woofferton or Issoudun.

Maybe, given that there is only one potential location where the choice of frequency, and proximity to a broadcast transmitter, could be a problem, the designers did do their homework after all and decided that it was alright for occasional problems to arise. Maybe. Then again, the other frequencies used by the Eurobalise include a military band and the middle of the 27 MHz Citizens Band!

Last night’s BBC Watchdog programme discussed the issue of the apparently poor WiFi connectivity available on a number of inter-city train routes across the UK. The programme conducted a survey of the paid-for WiFi service of three long-distance train operators. They measured the percentage of the journey for which a connection was available, and the time it took to download a short file. The average results, together with the current tariffs for WiFi on the three train companies surveyed are shown below.

| Train Operator | Connection Available | Time To Download A File | Price |
|---|---|---|---|
| Cross-Country | 96.7% | 39 seconds | £2 for 1 hour, £8 for 24 hours |
| East Coast | 79.4% | 13 seconds | £4.95 for 1 hour, £9.95 for 24 hours |
| Virgin Trains | 82.2% | 112 seconds | £4 for 1 hour, £8 for 24 hours |

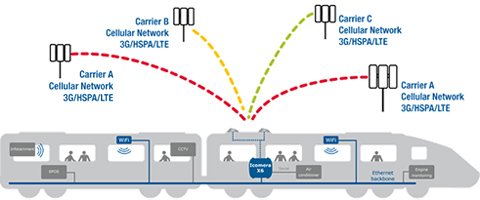

WiFi services on trains are provided by using antennas on the roof of the trains to connect to mobile networks. These mobile internet connections are then shared amongst all the WiFi users on a train. Companies such as Icomera and Nomad Digital provide boxes that enable multiple mobile internet connections to be combined together to increase the speed of the connection as a single 3G or 4G connection is not going to cut it when shared between multiple WiFi users.

In the programme, self-proclaimed IT Guru Adrian Mars then goes on to explain that the problem with the East Coast and Virgin services is that they make use of the signal from just one mobile operator and share that amongst all the WiFi users on the train, whereas Cross-Country use connections from multiple operators. This would explain why the time for which a connection was available on Cross-Country’s WiFi service was so much higher than on the other two, as they can make use of the overlapping coverage provided by multiple operators.

It is also true to say that many of the UK’s inter-city train routes pass through very sparsely populated areas, as well as tunnels and deep cuttings, where there is unlikely to be much in the way of a mobile signal. Given the different routes taken by Cross-Country, East Coast and Virgin Trains it is therefore unfair to directly compare them as each route will have a different proportion of these hard-to-get-at areas. 3G and 4G mobile networks also don’t work as well at high speed and (usually) trains travel at speeds that are fast enough to begin to affect performance.

Where the Watchdog’s IT guru did go astray was to suggest that it might be better for train passengers to rely on the connection to their own mobile phone for internet rather than the on-train WiFi. Why is this wrong? There are two main reasons. Firstly, the antennas used by the on-train WiFi systems are mounted on the train roof, whereas your phone will be lower down, inside the carriage. A previous Wireless Waffle article highlighted the need to get high to improve reception and the signal on the roof of the train will be stronger than that inside by dint of this fact alone.

But there is a much bigger problem… trains are typically constructed of metal. Some, including Virgin Trains’ Pendolino trains, have metallised windows. Passengers are thus enclosed in a Faraday cage which will do a grand job of stopping any signals on the outside of the carriages from making their way into the carriages. According to a paper written by consultants Mott MacDonald for Ofcom:

In modern trains the attenaution[sic] can be up to -30dB.

This means that of the signal presented to the outside of the carriage, only one thousandth of it makes it inside the carriage. Add this immense loss to the difference in height between the roof-mounted antenna and you sat in the carriage and it becomes apparent why using your own phone is highly unlikely to yield a better connection than that available through the on-train WiFi.
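The 'one thousandth' figure comes straight from the definition of the decibel as ten times the base-10 logarithm of a power ratio. A minimal Python sketch of the conversion follows; the function name is just for illustration.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a gain or loss in decibels into a linear power ratio."""
    return 10 ** (db / 10)


# The carriage attenuation quoted above, expressed as a gain of -30 dB.
ratio = db_to_power_ratio(-30)
print(f"Fraction of signal power getting inside: {ratio:.4f}")
print(f"Equivalent to 1 part in {1 / ratio:.0f}")
```

Every further 10 dB of loss divides the power by another factor of ten, so a train that was only slightly more signal-proof would leave your phone with a ten-thousandth of what is on offer outside.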

The Watchdog team suggested that due to the poor quality of the on-board WiFi it should be offered for free instead of making passengers pay. Many fare-paying train passengers would no doubt express a lot of sympathy with this suggestion. The cost to the rail companies of doing this is not trivial. East Coast, it was claimed, are upgrading their on-board WiFi to the tune of £2 million which, compared to the paltry £7 million profit they made in 2012/13, is quite a bite. But given the choice of free sandwiches (whose quality is as notoriously dubious as that of the WiFi connection) or free WiFi, most would surely prefer to enhance their digital diet instead of their gastronomic girth.

It could be argued that before the switch-over from analogue to digital television broadcasting, the value of terrestrial broadcasting was on the decline. Faced with fierce competition from cable and satellite, each offering 10 or more times the number of programmes, terrestrial television was a poor cousin whose main use was often to deliver public service broadcasting and, through general interest obligations imposed by national governments, to provide an accessible (free-to-air) service to 95% or more of a country's population (measured both geographically and demographically).

As digital switch-over has taken hold, terrestrial broadcasting has had a reprieve and is now able to offer true multi-channel television. Where previously it was only possible to broadcast a single television station on a single frequency, that frequency can now hold 10 or more standard definition (SD) channels, or 4 or 5 HD channels. Where there may have been 6 analogue stations on air, there can now be upwards of 60 stations. In many cases, 60 stations is enough for the average viewer and the bouquets of channels offered on cable or satellite may now begin to seem expensive compared to free-to-air DTT. In many countries the draw of cable and satellite TV is no longer the sheer variety of channels available, but the premium content that is on offer. Pay-TV services offering sport and movies continue to be popular, but such premium content is not usually available on DTT. Nonetheless, for many viewers DTT is perfectly sufficient.

But just as digital terrestrial TV (DTT) has had a new lease of life, the other broadcasting platforms are once again biting at its heels with new service offerings. Whilst 3D television seems to have taken a back seat for the time being, new, even higher definition television is stepping to the fore. Ultra-High Definition (UHD) has twice the resolution of standard HD (in each direction) and large UHD televisions are already on show and on sale in many retailers. At present there is minimal UHD content, however a handful of new UHD channels are being launched and, for example, the World Cup football in Brazil will be broadcast in UHD.

To be able to see the difference that UHD makes compared to standard HD, a very large television set is needed (42 inches or greater) and it could therefore be argued that UHD will always be a niche product. Then again, many broadcasters believed that HD would be a niche, but it is becoming the de facto standard and average television sizes are on the increase.

Technically speaking, UHD requires a bit-rate of around 20 Mbps. Whilst such bit rates are relatively easy for cable and satellite networks to deliver, broadcasting UHD over DTT would require at least half of a DVB-T2 multiplex, and the most advanced video (HEVC) codecs. In practice this means that where a terrestrial frequency currently carries 10 or more SD, or 4 or 5 HD channels, it might, at best, be able to offer 2 UHD channels.
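The channel count is simple division, sketched below in Python. Note the assumption: the 40 Mbps multiplex capacity is not a quoted figure but is inferred from the statement that a 20 Mbps UHD channel needs about half a DVB-T2 multiplex; real capacity varies with the transmission mode chosen. The names are made up for illustration.

```python
# Assumption: multiplex capacity inferred from "UHD needs about half a multiplex".
ASSUMED_MULTIPLEX_MBPS = 40
UHD_BITRATE_MBPS = 20  # approximate UHD bit-rate quoted above


def channels_per_multiplex(capacity_mbps: float, channel_mbps: float) -> int:
    """Whole number of channels of a given bit-rate that fit in one multiplex."""
    return int(capacity_mbps // channel_mbps)


uhd_channels = channels_per_multiplex(ASSUMED_MULTIPLEX_MBPS, UHD_BITRATE_MBPS)
print(f"UHD channels per multiplex: {uhd_channels}")
```

The same function can be fed other bit-rates to see how quickly the number of channels falls away as picture quality goes up.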

But that is not the end of the story. Just around the corner is super-high vision (SHV), sometimes called 8K, which once again doubles the resolution of the picture compared to UHD. SHV will require around 75 Mbps to be broadcast and at this point, whilst cable and satellite are still in the game, DTT is no longer able to broadcast even a single programme on a single frequency (without very complex transmitter and receiver arrangements that would require, for example, householders to install new, and potentially more than one, antenna). Of course to benefit from SHV, an even larger TV screen will be necessary, but with the growth in home cinema, it can perhaps be expected that in time, a goodly proportion of homes will want access to material in this super high resolution.

So where does that leave DTT? Arguably, within a few years, it will once again be unable to compete with the sheer girth of the bandwidth pipe that will be provided by cable and satellite networks. It's probably worth noting at this point that most IP-based video services (with the possible exception of those delivered by fibre-to-the-home) will also be unable to deliver live SHV content. This time there will be no reprieve for DTT as it simply does not have the capacity to deliver these higher definition services.

What is therefore to be done with DTT? Is it necessary to provide continued public service, universal access, free-to-air services that were the drivers for the original terrestrial television networks? Is its role to provide increased local content which might be uneconomic to broadcast over wide areas? Should it be used to deliver broadcast content to mobile devices where it has more than sufficient capacity to provide the resolution needed for smaller screens? Or, should it be turned off completely, and the spectrum it occupies be given over to something or someone else?

In countries where cable and satellite penetration is already high, there is arguably nothing much to lose by switching DTT off. In Germany, for example, RTL have already withdrawn from the DTT platform and there is talk of turning off the service completely. In countries that have not yet made the switch-over, it might be more cost effective to make the digital switch-over one that migrates to satellite (and cable where available) than to invest in soon-to-be-obsolete DTT transmitters.

Broadcasters should be largely agnostic to the closure of DTT. After all, their business is producing content and as long as it reaches the audiences, they ought not to care what the delivery mechanism is. Other radio spectrum users (e.g. mobile phones, governments) would surely welcome the additional spectrum that would become available. So who loses? Those companies who currently provide and operate the DTT transmitter networks, such as Arqiva in the UK, Teracom in Sweden and Digitenne in the Netherlands, who stand to lose multi-million pound (or Euro) contracts. For these organisations the stakes are high, but even the most humble economist would surely admit that the benefits elsewhere outweigh the costs. So let's turn off DTT - not necessarily today - but isn't it time to plan for a 'digital switch-off' to follow the 'digital switch-over'?
